Introduction

Welcome to our step-by-step tutorial on how to scrape Twitter followers. Social media data can provide valuable insights, and Twitter is no exception. Whether you're a researcher, marketer, or simply curious about your Twitter followers, scraping their data can help you better understand your audience and make data-driven decisions.

In this tutorial, we will guide you through the process of scraping Twitter follower data responsibly and ethically. We'll cover the tools and techniques required, legal considerations, and the steps to retrieve and manage the data efficiently. By the end of this tutorial, you'll have the knowledge and skills to scrape Twitter followers effectively while respecting Twitter's terms of service.

Why Scrape Twitter Followers?

Twitter, with its massive user base and dynamic content, has become a goldmine of information for individuals and businesses alike. Scraping Twitter followers can be a valuable strategy for various purposes. Here are some compelling reasons why you might want to consider scraping Twitter followers' data:

- Market Research: By scraping Twitter followers, you can gain insights into your competitors' audience. Analyzing the followers of your competitors can help you identify potential leads, target demographics, and trends within your industry.

- Audience Analysis: For businesses and influencers, understanding your own Twitter followers is crucial. You can scrape data such as location, interests, and engagement levels to tailor your content and marketing strategies effectively.

- Content Personalization: With scraped follower data, you can create personalized content that resonates with your audience. By knowing their preferences and behaviors, you can craft tweets that are more likely to go viral or generate engagement.

- Community Building: Building a strong online community is essential for brand loyalty. By scraping follower data, you can identify your most active and influential followers, allowing you to nurture and engage with them more effectively.

While these reasons make scraping Twitter followers appealing, it's important to emphasize that this process must be carried out responsibly and ethically. Always respect Twitter's terms of service and the privacy of your audience. Unauthorized or unethical scraping can lead to legal issues and damage your online reputation.

Benefits of Scraping Twitter Followers Responsibly

When done correctly, scraping Twitter followers can provide several benefits:

| Benefit | Description |
|---|---|
| Insightful Data | Access valuable data about your audience and competitors, including demographics, interests, and engagement metrics. |
| Effective Marketing | Create targeted marketing campaigns and personalized content that resonates with your followers. |
| Competitive Advantage | Stay ahead of the competition by understanding their audience and tailoring your strategies accordingly. |
| Community Engagement | Build stronger connections with your followers, enhancing brand loyalty and trust. |

In conclusion, scraping Twitter followers can offer valuable insights and advantages for businesses, marketers, and individuals. However, it must be approached with responsibility, ethics, and compliance with Twitter's policies to ensure long-term success and positive outcomes.

Legal and Ethical Considerations

Before embarking on any web scraping project, especially one involving Twitter followers, it's crucial to be aware of the legal and ethical aspects associated with data scraping. Failing to do so can result in legal consequences and damage to your reputation. Let's explore the key considerations:

1. Twitter's Terms of Service

Twitter's Terms of Service: Twitter has strict terms and policies governing the use of its platform and data. Ensure you read and comply with Twitter's Developer Agreement and Policy, Automation Rules, and any other relevant guidelines. Violating these terms can lead to the suspension of your Twitter account and legal action.

2. Respect for Privacy

Privacy: When scraping Twitter follower data, be mindful of user privacy. Avoid collecting sensitive or personally identifiable information without consent. Focus on publicly available data and respect users' preferences for privacy settings.

3. Rate Limiting and API Usage

Rate Limiting: Twitter's API has rate limits to prevent excessive data requests. Make sure to adhere to these limits to avoid being blocked or banned. Consider using proper authentication methods and handling rate limits gracefully in your scraping code.

4. Attribution and Data Usage

Attribution: If you use scraped Twitter data for public purposes, consider giving attribution to Twitter as the data source. This shows respect for Twitter's platform and policies. Always provide accurate and clear information about the data source when sharing it.

5. Ethical Scraping

Ethical Scraping: Scrutinize your scraping practices. Avoid spamming, harassing users, or scraping data with malicious intent. Your scraping should serve a legitimate and ethical purpose, such as research, analysis, or improving user experience.

6. User Consent

User Consent: If you plan to use scraped data for commercial purposes, consider obtaining consent from users whose data you collect. This can help mitigate legal risks and build trust with your audience.

7. Data Security

Data Security: Safeguard the scraped data to prevent data breaches or leaks. Implement secure storage and transmission practices to protect both user data and your reputation.

8. Stay Informed

Stay Informed: Twitter's policies and regulations may change over time. Stay updated with the latest developments, and be prepared to adjust your scraping practices accordingly to remain compliant.

Conclusion

Responsible web scraping is essential to avoid legal issues and maintain a positive online presence. By adhering to Twitter's terms, respecting user privacy, and practicing ethical scraping, you can harness the benefits of scraping Twitter followers while upholding legal and ethical standards.

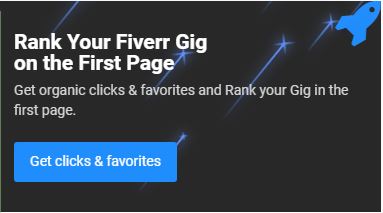

Tools and Libraries

Before diving into the process of scraping Twitter followers, it's essential to equip yourself with the right tools and libraries. These resources will help streamline your scraping project and ensure efficiency. Here's an overview of the key tools and libraries commonly used for this task:

1. Python

Python: Python is a popular programming language for web scraping due to its simplicity and a wealth of libraries. It offers powerful tools for making HTTP requests, parsing HTML, and handling data efficiently. You'll need a good grasp of Python to build your scraper.

2. Requests Library

Requests Library: The requests library in Python allows you to send HTTP requests, including direct calls to Twitter's API endpoints if you handle authentication yourself. Tweepy, covered below, wraps those API calls for you.

3. BeautifulSoup

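BeautifulSoup: BeautifulSoup is a Python library for parsing HTML and XML documents. It helps extract data from the web pages you retrieve with the Requests library by letting you navigate the HTML structure and pull out relevant information. Keep in mind that Twitter's web interface renders its content with JavaScript, so raw HTML fetched with Requests generally does not contain follower lists; for follower data, the API is the more dependable route.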

4. Twitter API

Twitter API: Twitter provides an API that allows authorized users to access Twitter data programmatically. To scrape Twitter followers responsibly, you'll need to create a Twitter Developer account, create an application, and obtain API keys and access tokens.

5. Tweepy

Tweepy: Tweepy is a Python library that simplifies the interaction with the Twitter API. It abstracts the authentication process and provides convenient methods for accessing user data, tweets, and follower information.

6. Scrapy

Scrapy: If you're planning a more extensive scraping project or need to crawl multiple Twitter profiles, Scrapy is a powerful web crawling and scraping framework in Python. It offers a structured way to build web spiders and scrape data at scale.

7. Data Storage Tools

Data Storage Tools: Depending on your project's size and requirements, you may need databases like MySQL, PostgreSQL, or NoSQL solutions like MongoDB to store the scraped Twitter follower data securely.

8. Jupyter Notebook

Jupyter Notebook: Jupyter Notebook is a fantastic tool for creating and sharing documents that contain live code, equations, visualizations, and narrative text. It's useful for documenting your scraping process and sharing your work with others.

9. Ethical Guidelines

Ethical Guidelines: Although not a tool or library, it's essential to have a clear set of ethical guidelines in place before you start scraping. Ensure that your scraping practices adhere to Twitter's policies and ethical standards.

Conclusion

With the right tools and libraries at your disposal, scraping Twitter followers' data becomes a manageable task. Python, Requests, BeautifulSoup, Tweepy, and ethical guidelines are your allies in this process. Remember to use these tools responsibly, respect user privacy, and comply with Twitter's terms and conditions to ensure a successful and ethical scraping project.

Step 1: Setting up your Environment

Before you can start scraping Twitter followers, it's crucial to establish the right environment and configure the necessary tools and libraries. Follow these steps to set up your environment for a successful scraping project:

1. Install Python

Python: If you haven't already, install Python on your system. You can download the latest version from the official Python website (https://www.python.org/downloads/). Python is the foundation for many scraping tools and libraries.

2. Create a Virtual Environment

Virtual Environment: To manage dependencies for your project, it's good practice to create a virtual environment. Use the following command in your terminal:

```shell
python -m venv myenv
```

Replace "myenv" with the name you want to give your virtual environment. On macOS or Linux, activate it using:

```shell
source myenv/bin/activate
```

On Windows, run myenv\Scripts\activate instead.

3. Install Required Libraries

Libraries: Install the necessary Python libraries using pip, the Python package manager. You'll need libraries like Requests, BeautifulSoup, and Tweepy for web scraping. Use the following commands:

```shell
pip install requests
pip install beautifulsoup4
pip install tweepy
```

4. Set Up Twitter Developer Account

Twitter Developer Account: To access Twitter data programmatically, create a Twitter Developer account at the Twitter Developer Platform (https://developer.twitter.com/en/apps). Create a new application and obtain your API keys and access tokens.

5. Configure API Keys

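API Keys: Store your Twitter API keys and access tokens securely. You'll use these credentials to authenticate your requests to Twitter's servers. Keep them confidential: never hard-code them in scripts you share or commit to version control. Environment variables or a configuration file excluded from version control are safer places for them.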
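
As a minimal sketch of keeping credentials out of your code, the helper below reads them from environment variables. The variable names (TWITTER_API_KEY and so on) and the load_credentials function are hypothetical choices for this example, not anything Twitter or Tweepy requires.

```python
import os

# Hypothetical environment-variable names; choose whatever suits your setup.
CREDENTIAL_VARS = {
    "consumer_key": "TWITTER_API_KEY",
    "consumer_secret": "TWITTER_API_SECRET",
    "access_token": "TWITTER_ACCESS_TOKEN",
    "access_token_secret": "TWITTER_ACCESS_TOKEN_SECRET",
}


def load_credentials(env=os.environ):
    """Read the four OAuth 1.0a credentials from the environment.

    Raises KeyError naming every missing variable, so a misconfigured
    shell fails loudly instead of sending empty strings to the API.
    """
    missing = [var for var in CREDENTIAL_VARS.values() if not env.get(var)]
    if missing:
        raise KeyError(f"Missing environment variables: {', '.join(missing)}")
    return {name: env[var] for name, var in CREDENTIAL_VARS.items()}
```

Because the dictionary keys match the parameter names of tweepy.OAuth1UserHandler (Tweepy 4.5 and later), you could pass the result straight through with tweepy.OAuth1UserHandler(**load_credentials()).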

6. Create a Project Directory

Project Directory: Organize your scraping project by creating a dedicated directory. This directory will contain your Python scripts, data, and any other project-related files.

7. Set up Jupyter Notebook (Optional)

Jupyter Notebook: If you plan to document your scraping process interactively, install Jupyter Notebook with the following command:

```shell
pip install jupyter
```

You can then start a Jupyter Notebook session using:

```shell
jupyter notebook
```

Now, you're ready to move on to the next steps in the Twitter follower scraping process. Setting up your environment correctly is essential for a smooth and efficient scraping experience.

Step 2: Authentication

Authentication is a crucial step when it comes to scraping Twitter data. Twitter requires users to authenticate their requests to ensure the security and privacy of its platform. In this step, we'll guide you through the process of authenticating with Twitter's API to access the data you need.

1. Twitter Developer Account

Twitter Developer Account: As mentioned earlier, you should have already created a Twitter Developer account and registered your application to obtain API keys and access tokens. If you haven't done so, please refer to "Step 1" for instructions.

2. API Keys and Tokens

API Keys and Tokens: You should have the following credentials ready:

| Credential | Description |
|---|---|
| API Key | Also known as the "consumer key." It identifies your application to Twitter's servers. |
| API Secret Key | Also known as the "consumer secret." Paired with the API key, it is used to sign your application's requests, so treat it like a password. |
| Access Token | Represents the Twitter user who authorized your application to access their data. |
| Access Token Secret | Paired with the access token, it is used to sign requests made on that user's behalf. |

3. Python Code for Authentication

Python Code: To authenticate with Twitter's API in your Python script, use the Tweepy library. Here's a code snippet to set up authentication:

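```python
import tweepy

# Placeholder credentials: replace them with your own, ideally loaded
# from environment variables rather than typed into the script.
consumer_key = "Your_Consumer_Key"
consumer_secret = "Your_Consumer_Secret"
access_token = "Your_Access_Token"
access_token_secret = "Your_Access_Token_Secret"

# Authenticate with OAuth 1.0a user context
# (Tweepy 4.5+ name; older releases call this class OAuthHandler).
auth = tweepy.OAuth1UserHandler(
    consumer_key, consumer_secret, access_token, access_token_secret
)

# Create the API object; wait_on_rate_limit=True makes Tweepy sleep
# until the rate-limit window resets instead of raising an error.
api = tweepy.API(auth, wait_on_rate_limit=True)
```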

Replace "Your_Consumer_Key," "Your_Consumer_Secret," "Your_Access_Token," and "Your_Access_Token_Secret" with your actual credentials. This code initializes the Tweepy API object with your authentication details.

4. Testing Authentication

Testing: To verify that your authentication is working correctly, you can make a simple API request. For example, you can retrieve your own user information:

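```python
# Tweepy 4.x removed api.me(); verify_credentials() returns your own user.
user = api.verify_credentials()
print(f"Authenticated as: {user.screen_name}")
```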

If the authentication is successful, you will see your Twitter username printed to the console.

With authentication in place, you're now ready to proceed to "Step 3: Scraping Twitter Followers," where we'll explore how to retrieve follower data responsibly and effectively.

Step 3: Scraping Twitter Followers

Now that you've set up your environment and authenticated with Twitter's API, it's time to start scraping Twitter followers' data. In this step, we'll guide you through the process of retrieving follower information effectively and responsibly.

1. Choose the Target User

Target User: Determine whose followers you want to scrape. You can choose to scrape the followers of a specific Twitter user, which can be yourself, a competitor, or any public account.
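
Handles are easy to mistype or paste with extra characters. A small, hypothetical normalize_handle helper can turn input such as "@name" or a profile URL into the bare username that Tweepy's screen_name parameter expects. It assumes Twitter's username rule of at most 15 letters, digits, or underscores; treating x.com URLs like twitter.com ones is also an assumption about your input.

```python
import re

# Twitter handles: 1 to 15 characters, letters, digits, or underscores.
_HANDLE_RE = re.compile(r"[A-Za-z0-9_]{1,15}")


def normalize_handle(raw: str) -> str:
    """Turn '@Name', ' name ', or a profile URL into a bare handle.

    Raises ValueError if what remains is not a plausible handle.
    """
    handle = raw.strip()
    # Strip an optional profile-URL prefix (assumed input formats).
    handle = re.sub(r"^(https?://)?(www\.)?(twitter|x)\.com/", "", handle)
    handle = handle.lstrip("@").rstrip("/")
    if not _HANDLE_RE.fullmatch(handle):
        raise ValueError(f"Not a valid Twitter handle: {raw!r}")
    return handle
```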

2. Retrieve Follower IDs

Follower IDs: Twitter's API allows you to retrieve follower IDs, which are unique identifiers for each follower. You can use the Tweepy library to request these IDs. Here's an example:

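```python
target_username = "target_user"
follower_ids = []

# Tweepy 4.x renamed followers_ids to get_follower_ids.
# Cursor follows the API's pagination; each page holds up to 5,000 IDs.
for page in tweepy.Cursor(api.get_follower_ids, screen_name=target_username).pages():
    follower_ids.extend(page)
```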

Replace "target_user" with the Twitter username of the account whose followers you want to scrape. The code above retrieves follower IDs and stores them in the "follower_ids" list.

3. Fetch Follower Data

Follower Data: With the follower IDs collected, you can now fetch data about each follower individually. Make requests to the Twitter API to retrieve user profiles. Be mindful of rate limits to avoid overloading the API. Here's a simplified example:

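```python
follower_data = []

for user_id in follower_ids:
    try:
        # Tweepy 4.x requires keyword arguments for get_user.
        user = api.get_user(user_id=user_id)
        follower_data.append({
            "Username": user.screen_name,
            "Name": user.name,
            "Followers": user.followers_count,
            "Description": user.description,
            # Add more desired attributes
        })
    except tweepy.TweepyException as e:
        # Tweepy 4.x base exception (formerly TweepError), raised for
        # example for suspended or deleted accounts. Log it and move on.
        print(f"Skipping {user_id}: {e}")
```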

This code fetches user data for each follower, including their username, name, follower count, and description. You can customize the attributes you want to collect.
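
Requesting one profile per call costs one API request per follower, which exhausts rate limits quickly on large accounts. Twitter's v1.1 users/lookup endpoint accepts up to 100 user IDs per request, and Tweepy 4.x exposes it as api.lookup_users(user_id=...). The sketch below batches the IDs accordingly; chunked and fetch_profiles are illustrative helper names, and error handling is omitted for brevity (wrap the call in try/except as in the loop above if you need it).

```python
from itertools import islice


def chunked(items, size=100):
    """Yield successive lists of at most `size` items."""
    it = iter(items)
    while batch := list(islice(it, size)):
        yield batch


def fetch_profiles(api, follower_ids, batch_size=100):
    """Fetch follower profiles in batches via api.lookup_users (Tweepy 4.x).

    users/lookup accepts at most 100 IDs per request, so keep
    batch_size at or below 100.
    """
    follower_data = []
    for batch in chunked(follower_ids, batch_size):
        for user in api.lookup_users(user_id=batch):
            follower_data.append({
                "Username": user.screen_name,
                "Name": user.name,
                "Followers": user.followers_count,
                "Description": user.description,
            })
    return follower_data
```

With 250 follower IDs, this makes three requests (100, 100, and 50 IDs) instead of 250.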

4. Data Storage

Data Storage: Store the scraped follower data in a suitable format. You can use libraries like Pandas to create dataframes or save the data to a database for further analysis.
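
For the database option, here is a minimal sketch using Python's built-in sqlite3 module, so there is nothing extra to install. The table layout and the save_followers name are assumptions for this example; it reuses the dictionary keys from the follower_data rows above and makes the username the primary key, so re-running a scrape updates rows instead of duplicating them. (If you prefer Pandas, pandas.DataFrame(follower_data) turns the same list into a dataframe.)

```python
import sqlite3


def save_followers(conn, rows):
    """Upsert follower rows into a `followers` table and return its row count."""
    with conn:  # commits on success, rolls back if an error occurs
        conn.execute(
            """CREATE TABLE IF NOT EXISTS followers (
                   username    TEXT PRIMARY KEY,
                   name        TEXT,
                   followers   INTEGER,
                   description TEXT
               )"""
        )
        # INSERT OR REPLACE keeps one row per username across repeated scrapes.
        conn.executemany(
            "INSERT OR REPLACE INTO followers "
            "VALUES (:Username, :Name, :Followers, :Description)",
            rows,
        )
    return conn.execute("SELECT COUNT(*) FROM followers").fetchone()[0]
```

Usage: conn = sqlite3.connect("followers.db"), then save_followers(conn, follower_data).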

5. Respect Rate Limits

Rate Limits: Twitter's API has rate limits to prevent abuse. Be sure to respect these limits to avoid temporary bans. You can implement rate limiting logic in your code to handle this responsibly.
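
Tweepy can handle the waiting for you: passing wait_on_rate_limit=True to tweepy.API makes it sleep until a rate-limit window resets. If you also want your own client-side throttle, a sliding-window limiter is one simple approach. The class below is a sketch; the limit values you pass in are placeholders, so check Twitter's documentation for the actual per-endpoint limits of your access level.

```python
import time
from collections import deque


class WindowRateLimiter:
    """Client-side throttle: at most `max_calls` calls per `window` seconds.

    The clock and sleep functions are injectable so the logic can be
    tested without actually waiting.
    """

    def __init__(self, max_calls, window, clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.window = window
        self.clock = clock
        self.sleep = sleep
        self.calls = deque()  # timestamps of calls inside the current window

    def _prune(self, now):
        # Forget calls that are at least `window` seconds old.
        while self.calls and now - self.calls[0] >= self.window:
            self.calls.popleft()

    def wait(self):
        """Block until another call is allowed, then record it."""
        now = self.clock()
        self._prune(now)
        while len(self.calls) >= self.max_calls:
            # Sleep until the oldest call in the window expires.
            self.sleep(self.window - (now - self.calls[0]))
            now = self.clock()
            self._prune(now)
        self.calls.append(now)
```

You would create one limiter per endpoint, for example WindowRateLimiter(max_calls=15, window=900) with placeholder values, and call limiter.wait() immediately before each API request.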

6. Handling Errors

Error Handling: Be prepared to handle exceptions, such as private accounts or rate limit exceeded errors, gracefully in your code to ensure a smooth scraping process.
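
For transient failures, a common pattern is to retry with exponential backoff. The function below is a generic sketch, not part of Tweepy, and you must choose which exceptions are worth retrying. With Tweepy 4.x, tweepy.TooManyRequests and tweepy.TwitterServerError are reasonable candidates, whereas errors such as tweepy.NotFound (a deleted account, for instance) will not succeed on a second try and are better skipped.

```python
import random
import time


def call_with_retries(func, *args, retry_on, max_attempts=4,
                      base_delay=1.0, sleep=time.sleep, **kwargs):
    """Call func(*args, **kwargs), retrying the exception types in `retry_on`.

    Between attempts it waits base_delay * 2**attempt seconds plus up to
    base_delay seconds of random jitter; after the final attempt the last
    error is re-raised. Exceptions not listed in `retry_on` propagate at once.
    """
    for attempt in range(max_attempts):
        try:
            return func(*args, **kwargs)
        except retry_on:
            if attempt == max_attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
```

Usage with Tweepy might look like call_with_retries(api.get_user, user_id=user_id, retry_on=(tweepy.TooManyRequests, tweepy.TwitterServerError)).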

Remember that scraping Twitter followers should be done ethically, respecting Twitter's terms of service and user privacy. Avoid excessive scraping, spamming, or scraping sensitive data.

With these steps, you can effectively scrape Twitter followers' data for your analysis, research, or marketing purposes. In the next step, "Step 4: Data Handling and Storage," we'll explore how to manage and analyze the collected data efficiently.

Step 4: Data Handling and Storage

Once you've successfully scraped Twitter followers' data, it's essential to manage and store the information efficiently for analysis or further use. In this step, we'll guide you through best practices for handling and storing your scraped data.

1. Data Cleaning and Preparation

Data Cleaning: Start by cleaning and preparing your data. Remove duplicates, handle missing values, and format the data consistently. This step ensures the data's quality and reliability for analysis.
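
As an illustration, the hypothetical clean_followers function below applies those three steps to rows shaped like the follower_data dictionaries from Step 3. It removes duplicates by username (case-insensitively, since Twitter handles are not case-sensitive, with the last occurrence winning), fills missing values with empty strings or zero, and normalizes whitespace. Which defaults to use for missing values is a judgment call that depends on your analysis.

```python
def clean_followers(rows):
    """Deduplicate by username, fill missing values, and normalize formatting."""
    cleaned = {}
    for row in rows:
        username = (row.get("Username") or "").strip()
        if not username:
            continue  # a row without a username cannot be deduplicated; drop it
        key = username.lower()  # handles are case-insensitive
        cleaned[key] = {  # a later duplicate replaces the earlier one
            "Username": username,
            "Name": (row.get("Name") or "").strip(),
            "Followers": int(row.get("Followers") or 0),
            # Collapse newlines and repeated spaces in bios.
            "Description": " ".join((row.get("Description") or "").split()),
        }
    return list(cleaned.values())
```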

2. Data Analysis

Data Analysis: Depending on your project's objectives, you can perform various analyses on the scraped data. Explore follower demographics, engagement metrics, and other relevant insights to gain a better understanding of your audience.
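
A few summary figures are often a good starting point. This sketch assumes rows shaped like the follower_data dictionaries from Step 3, ideally after cleaning, and treats follower count as a rough proxy for influence, which is a simplification; engagement data would give a fuller picture. The summarize_followers name and its output keys are illustrative.

```python
from statistics import median


def summarize_followers(rows, top_n=3):
    """Return basic audience statistics for a list of follower rows."""
    if not rows:
        return {"count": 0, "median_followers": 0, "share_with_bio": 0.0, "most_followed": []}
    counts = [row["Followers"] for row in rows]
    # Highest follower counts first: a crude stand-in for "influential".
    top = sorted(rows, key=lambda row: row["Followers"], reverse=True)[:top_n]
    return {
        "count": len(rows),
        "median_followers": median(counts),
        "share_with_bio": sum(1 for row in rows if row["Description"]) / len(rows),
        "most_followed": [row["Username"] for row in top],
    }
```

The median is used rather than the mean because a handful of very large accounts can skew an average.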

3. Visualization

Visualization: Visualize your findings using charts and graphs to make the data more accessible and understandable. Tools like Matplotlib and Seaborn in Python can help create informative visualizations.

4. Export Data

Export Data: After analysis, export the data to a format suitable for your needs. Common options include CSV, JSON, or even a database for larger datasets. Ensure you maintain data integrity during the export process.
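
The sketch below writes the same rows to both CSV and JSON with Python's standard library. Writing UTF-8 and passing ensure_ascii=False keeps emoji and non-Latin names intact, and the csv module quotes fields that contain commas or line breaks. The file paths and the export_followers name are placeholders.

```python
import csv
import json


def export_followers(rows, csv_path, json_path):
    """Write the same list of dict rows to a CSV file and a JSON file."""
    fieldnames = list(rows[0].keys()) if rows else []
    # newline="" lets the csv module control line endings itself.
    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
    with open(json_path, "w", encoding="utf-8") as f:
        # ensure_ascii=False keeps emoji and non-Latin characters readable.
        json.dump(rows, f, ensure_ascii=False, indent=2)
```

One integrity caveat: CSV stores every value as text, so a numeric column such as Followers comes back as strings when read with csv.DictReader, while JSON preserves the types.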

5. Data Security

Data Security: Protect your scraped data by storing it securely. Implement encryption and access controls if necessary, especially if the data contains sensitive information. Security breaches can have severe consequences.
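
Encryption at rest usually calls for a vetted third-party library (for example, the Fernet recipe in the cryptography package). A simpler access-control measure needs only the standard library: create data files so that only your user account can read them. The write_private_file helper below is a sketch for POSIX systems such as Linux and macOS; on Windows the permission bits are largely ignored, so you would rely on folder ACLs instead.

```python
import os


def write_private_file(path, data):
    """Write bytes to `path`, readable and writable only by the file's owner.

    On POSIX systems a new file is created with mode 0o600, so it is never
    readable by other users; fchmod also tightens an existing file that had
    looser permissions. On Windows, os.open honors little beyond the
    read-only flag, so use folder ACLs there instead.
    """
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC | getattr(os, "O_BINARY", 0)
    fd = os.open(path, flags, 0o600)
    if hasattr(os, "fchmod"):
        os.fchmod(fd, 0o600)
    with os.fdopen(fd, "wb") as f:
        f.write(data)
```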

6. Backup

Backup: Regularly back up your scraped data to prevent data loss in case of unforeseen issues or system failures. Automated backup solutions can be particularly helpful.
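
Even a basic scheduled copy is better than no backup. Here is a minimal sketch that copies a data file into a backup folder under a UTC timestamp and prunes all but the newest few copies; the folder name, the retention count, and the backup_file helper are assumptions. You could run it from cron or Windows Task Scheduler, ideally targeting a different disk or machine.

```python
import shutil
from datetime import datetime, timezone
from pathlib import Path


def backup_file(src, backup_dir="backups", keep=7):
    """Copy `src` to a timestamped file in `backup_dir`; keep the newest `keep` copies."""
    if keep < 1:
        raise ValueError("keep must be at least 1")
    src = Path(src)
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    dest = backup_dir / f"{src.stem}-{stamp}{src.suffix}"
    shutil.copy2(src, dest)  # copy2 also preserves file timestamps
    # Fixed-width timestamps sort lexicographically, oldest first.
    backups = sorted(backup_dir.glob(f"{src.stem}-*{src.suffix}"))
    for old in backups[:-keep]:
        old.unlink()
    return dest
```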

7. Compliance and Privacy

Compliance and Privacy: Continue to adhere to Twitter's terms of service and privacy regulations. Be cautious about sharing or distributing the scraped data, especially if it contains personally identifiable information.

8. Documentation

Documentation: Maintain documentation of your scraping process, data cleaning steps, and analysis methodologies. This documentation will be valuable if you need to revisit or share your project with others.

9. Ethical Use

Ethical Use: Use the scraped data ethically and responsibly. Avoid spamming or any activities that could violate Twitter's policies or harm individuals' privacy.

10. Insights and Action

Insights and Action: Finally, leverage the insights gained from your scraped data to inform your strategies, whether in marketing, content creation, or research. Use the data to make informed decisions and take action accordingly.

By following these best practices for data handling and storage, you can ensure that the data you've scraped from Twitter followers remains valuable and secure. It can be a powerful resource for understanding your audience and making data-driven decisions.

In the sections that follow, we answer some frequently asked questions and then, in the final "Conclusion," summarize the key takeaways and emphasize the importance of responsible web scraping.

FAQ

Here are some frequently asked questions (FAQs) about scraping Twitter followers and related considerations:

1. Is scraping Twitter followers legal?

Scraping Twitter followers can be legal, but it must be done within the bounds of Twitter's terms of service and applicable laws. Unauthorized or unethical scraping can lead to legal consequences and account suspension.

2. Can I scrape data from private Twitter accounts?

No, scraping data from private Twitter accounts without permission is a violation of both Twitter's policies and user privacy. Always respect users' privacy settings and obtain consent if required.

3. How can I avoid hitting rate limits when scraping Twitter data?

You can't lift Twitter's rate limits, but you can stay within them. Throttle your requests in your scraping code, or create the Tweepy API object with wait_on_rate_limit=True so that it pauses automatically until the limit resets. Twitter's paid API access tiers offer higher rate limits, but they require a subscription.

4. What can I do with the scraped Twitter follower data?

The scraped data can be used for various purposes, such as market research, audience analysis, content personalization, and community building. The key is to use the data responsibly and ethically to benefit your objectives.

5. How do I handle errors when scraping Twitter data?

You should implement error handling in your code to manage exceptions gracefully. For example, when encountering private accounts or rate limit exceeded errors, your code should handle them without disruption and continue scraping where possible.

6. Do I need to get permission to scrape Twitter follower data?

If you're scraping your own Twitter followers, no permission is typically required. However, when scraping data from other Twitter users, especially for commercial or research purposes, it's a good practice to obtain their consent or ensure compliance with applicable laws and regulations.

7. What tools and libraries are commonly used for scraping Twitter data?

Commonly used tools and libraries for scraping Twitter data include Python, Requests, BeautifulSoup, Tweepy, and Scrapy. These tools provide the necessary functionality for making requests to Twitter's servers, parsing HTML, and handling data efficiently.

Remember that responsible and ethical scraping practices are essential when working with Twitter data. Always prioritize user privacy and comply with Twitter's terms of service to avoid legal issues and maintain a positive online presence.

Conclusion

In this comprehensive guide, we've walked you through the process of scraping Twitter followers' data responsibly and effectively. We covered essential steps, best practices, and considerations to ensure a successful scraping project. Here's a summary of key takeaways:

1. Legal and Ethical Compliance

Always adhere to Twitter's terms of service and policies when scraping Twitter data. Respect user privacy, obtain consent when necessary, and avoid unethical practices that could harm users or violate regulations.

2. Setting up Your Environment

Properly configure your development environment by installing Python, creating a virtual environment, and setting up essential libraries like Requests, BeautifulSoup, and Tweepy. Obtain Twitter API keys and tokens for authentication.

3. Authentication

Use Tweepy to authenticate your requests with Twitter's API. Protect your API keys and tokens, and ensure your authentication process is correctly implemented to access Twitter data securely.

4. Scraping Twitter Followers

Choose your target Twitter user and retrieve follower IDs using Tweepy. Fetch follower data individually, handle errors gracefully, and respect rate limits to avoid disruptions.

5. Data Handling and Storage

After scraping, clean and prepare your data for analysis. Perform data analysis, visualize your findings, and export the data to a suitable format. Prioritize data security, backups, and ethical use of the scraped data.

By following these steps and best practices, you can harness the power of scraping Twitter followers' data for market research, audience analysis, content personalization, and more. Remember that responsible scraping not only ensures legal compliance but also maintains your online reputation as a conscientious data practitioner.

Scraping Twitter followers is a valuable tool when used ethically and responsibly. Continuously stay informed about Twitter's policies and industry regulations to adapt your scraping practices as needed. With the knowledge gained from this guide, you're well-equipped to embark on your Twitter scraping journey while upholding ethical standards and achieving your data-driven goals.